AI in Cybersecurity: Promises vs Pitfalls — What Machines Still Miss

In the last few years, artificial intelligence (AI) has been on everyone's lips in cybersecurity. Because AI tools promise faster threat detection, real-time response, and automated defense, organizations are investing heavily in them. However, AI still has important limitations. This article discusses what AI can do in cybersecurity, the challenges enterprises often encounter, and why human judgment and expertise remain necessary.

What AI Promises in Cybersecurity

Artificial intelligence offers some clear benefits in cyber operations:

- Automated threat detection and real-time monitoring: AI/ML tools can analyze large volumes of log data, network traffic, and user behaviour, and flag anomalies far faster than a human analyst could.

- Scaling security operations: Organizations with few security staff can fully automate certain tasks, or use automation to stretch their existing coverage across more endpoints and a wider attack surface.

- Predictive analytics and threat intelligence: AI can help identify behaviour patterns associated with certain types of incidents or with the assets being targeted. This helps organizations recognize likely attack vectors before they are exploited and carry out proactive risk assessments before a breach occurs.

- Improved efficiencies and cost savings: Many security processes, such as vulnerability scanning, phishing simulation, and malware signature detection, can be partially or fully automated, saving organizations time and valuable resources.
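To make the anomaly-detection idea concrete, here is a minimal sketch of one of the simplest possible approaches: comparing new observations against a statistical baseline. It is an illustration only, not how any particular product works. The function name `flag_anomalies`, the sample data (hourly failed-login counts), and the threshold of three standard deviations are all assumptions chosen for the example.

```python
from statistics import mean, stdev

def flag_anomalies(baseline, observed, threshold=3.0):
    """Return indices of observed values that deviate from the baseline
    mean by more than `threshold` baseline standard deviations."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: anything different is anomalous.
        return [i for i, v in enumerate(observed) if v != mu]
    return [i for i, v in enumerate(observed) if abs(v - mu) / sigma > threshold]

# Hypothetical hourly failed-login counts from a quiet period.
baseline = [4, 6, 5, 7, 5, 6, 4, 5, 6, 5]
# New observations; the third hour contains a burst of failures.
observed = [5, 7, 48, 6]

print(flag_anomalies(baseline, observed))  # [2]
```

Real tools use far richer models, but the principle is the same: the system learns what "normal" looks like and flags departures from it, which is also why its output is only as good as its baseline.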

These benefits are real, but they come with trade-offs.

Common Pitfalls and Limitations

Despite the advantages, AI is not a silver bullet. Below are key pitfalls that are often missing from marketing claims but highly relevant in practice:

- False Positives / False Negatives & Alert Fatigue: AI systems tend to generate alerts — sometimes too many. False positives can overwhelm security teams, leading to “alert fatigue,” where genuinely dangerous alerts may be ignored. False negatives are even more dangerous, as missed threats can slip through unnoticed.

- Data Quality, Bias, and Model Poisoning: AI depends heavily on the training data. If the data is poor, biased, outdated or deliberately poisoned by adversaries, the AI’s decisions will be flawed. This could mean misclassifying attacker behaviour or failing to detect emerging threats.

- Inability to Handle Zero-Day, Novel or Context-Specific Threats: AI often works well with known patterns. However, zero-day exploits, polymorphic malware, or social engineering attacks that exploit human psychology are harder for AI tools to detect reliably. Contextual awareness and creative thinking are needed.

- Black-Box Behaviour, Explainability & Auditing Issues: Many AI/ML models (especially deep learning) are opaque. It can be hard to trace why a particular decision was made, which undermines auditing, compliance, and trust. Regulatory standards often require transparency.

- Ethical, Privacy, and Regulatory Risks: Security practice inevitably involves handling sensitive information, and AI systems may require terabytes of user or system data. Collecting and processing that data raises privacy and ethical questions. Misuse of sensitive data, bias in algorithmic decision-making, or unauthorized access to sensitive data through an API could expose your organization to liability under data privacy laws and policies.

- Operational Costs, Integration, and Skill Gaps: Implementing AI tools, maintaining them, and retraining or updating them on an ongoing basis all carry back-end operational costs. Integrating AI tools into existing tech stacks and achieving interoperability will likely add further security and operational overhead. Finally, hiring capable staff to select, develop, and manage AI tools is challenging, because few people combine an understanding of AI with cybersecurity knowledge and awareness of the business domain.
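The alert-fatigue problem in the first bullet is largely a matter of base rates, and a short worked calculation shows why. The sketch below applies Bayes' rule to estimate what fraction of alerts are genuine. Every number in it (one million events per day, 1 in 10,000 malicious, 99% detection rate, 1% false positive rate) is an assumption picked for illustration, not a measurement from any real system.

```python
def alert_precision(base_rate, true_positive_rate, false_positive_rate):
    """Probability that an alert is a real threat, via Bayes' rule."""
    true_alerts = base_rate * true_positive_rate
    false_alerts = (1 - base_rate) * false_positive_rate
    return true_alerts / (true_alerts + false_alerts)

# Assumed, illustrative figures.
events_per_day = 1_000_000
base_rate = 0.0001            # 1 in 10,000 events is malicious
true_positive_rate = 0.99     # detector catches 99% of malicious events
false_positive_rate = 0.01    # detector wrongly flags 1% of benign events

precision = alert_precision(base_rate, true_positive_rate, false_positive_rate)
real_alerts = events_per_day * base_rate * true_positive_rate
false_alerts = events_per_day * (1 - base_rate) * false_positive_rate

print(f"Real alerts per day:  {real_alerts:.0f}")    # 99
print(f"False alerts per day: {false_alerts:.0f}")   # 9999
print(f"Share of alerts that are real: {precision:.2%}")  # 0.98%
```

Under these assumptions, a detector that sounds excellent on paper still produces roughly a hundred false alarms for every real one, simply because benign events vastly outnumber malicious ones.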

Secure AI — The Right Way

CyberCube helps organizations adopt AI responsibly with explainable models, bias monitoring, and human validation at every layer.

Instances Where AI Has Shown Limitations

For example:

- Phishing and Social Engineering: Phishing emails written with AI look far more realistic than before, and even trained experts may struggle to spot them. Because detection relies on known patterns, new or personalized forms of phishing often go undetected.

- Adversarial Attacks and Evasion of Models: Attackers intentionally craft inputs (adding noise, small changes, etc.) to fool AI models and avoid detection.

- Data Leak via AI Tools: Staff using public LLMs or AI services might unknowingly upload confidential or proprietary data, risking irreversible data exposure.
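The evasion idea in the second bullet can be shown with a deliberately simple toy. The sketch below uses a hand-written keyword scorer as a stand-in for a learned model; it is not a real phishing detector, and the term weights, threshold, and sample messages are all invented for the example. The point is only that small character substitutions can leave a message readable to a human while removing the features the detector relies on.

```python
# Hypothetical term weights standing in for a trained model's features.
SUSPICIOUS_TERMS = {"password": 2.0, "verify": 1.5, "urgent": 1.5, "account": 1.0}

def phishing_score(text):
    """Sum the weights of known suspicious words found in the text."""
    words = (w.strip(".,!?:") for w in text.lower().split())
    return sum(SUSPICIOUS_TERMS.get(w, 0.0) for w in words)

def is_flagged(text, threshold=3.0):
    """Flag the message if its score reaches the (assumed) threshold."""
    return phishing_score(text) >= threshold

original = "Urgent: verify your account password today"
# Same message with digits swapped in for letters.
evasive = "Urgent: ver1fy your acc0unt passw0rd today"

print(phishing_score(original), is_flagged(original))  # 6.0 True
print(phishing_score(evasive), is_flagged(evasive))    # 1.5 False
```

Production models are harder to fool than this, but adversarial inputs against them follow the same logic: find the smallest change that moves the input across the decision boundary.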

These examples are not merely theoretical vulnerabilities. They are real issues that affect organisations today.

The Supremacy of Human Skill

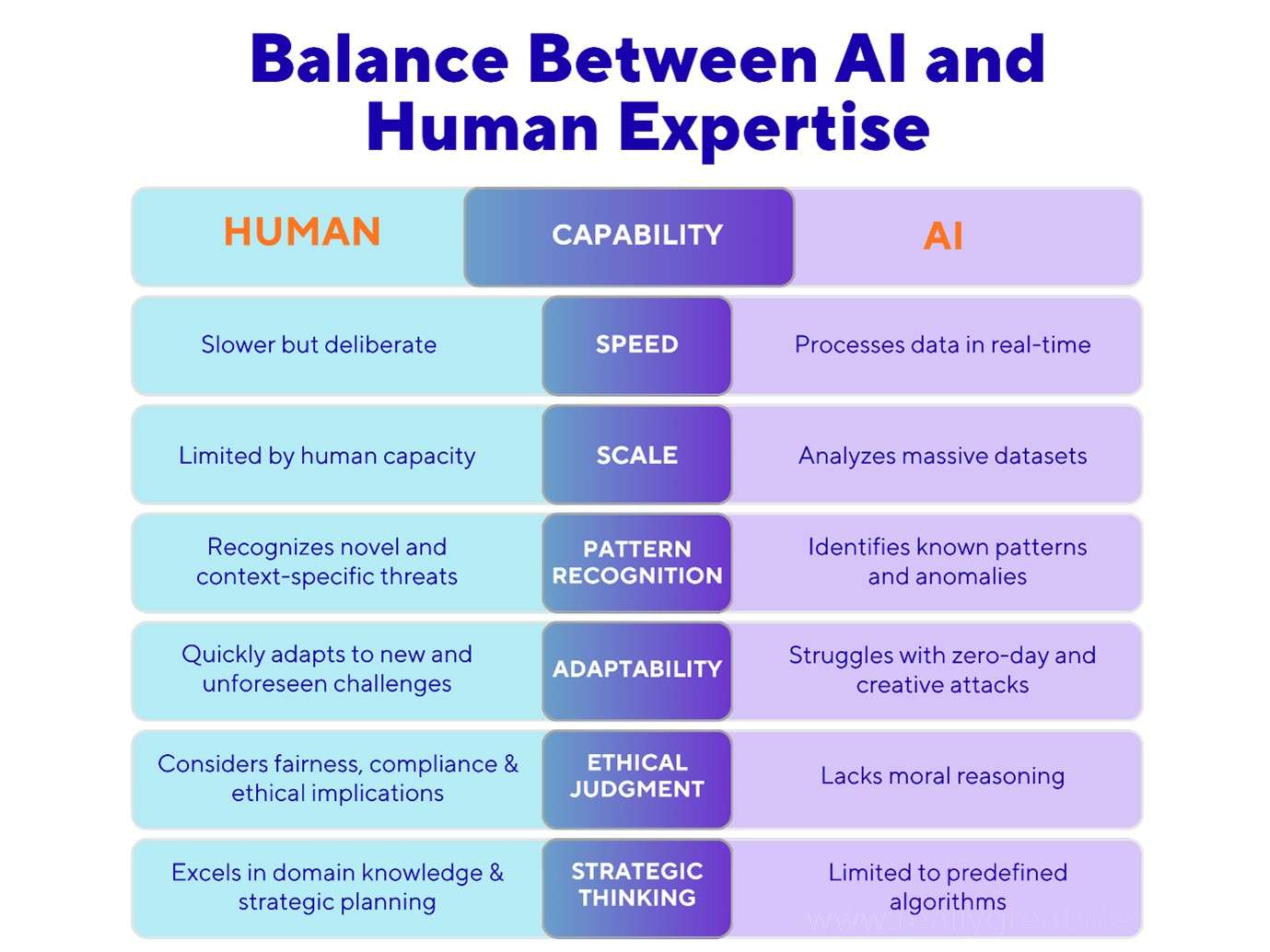

While AI technologies offer speed and scale beyond human ability, there are many areas of cybersecurity where they cannot replace human experts:

- Contextual foresight and intuition: Human experts can look beyond patterns. They incorporate knowledge of industry risk, attacker motivation, possible business impact, and regulatory requirements.

- Critical thinking and adaptivity: When an attack diverges from existing patterns, or the adversary shows ingenuity, a person can reason about it and adjust in real time. An AI model may misclassify the activity or simply lack the training data to recognize it.

- Ethical judgment and accountability: Humans apply moral and legal reasoning; when AI errs, human oversight ensures responsibility.

- Strategic threat hunting and investigations: Cross-domain intelligence and creative analysis remain uniquely human strengths.

- Trust and communication: Human experts do more than report risk to leadership, recommend response options, or say where a control belongs. They explain why those choices matter and help leadership use dashboards to understand the risk.

The Balance Between AI and Human Expertise

Best Practices: How to Balance AI and Humans

To get the best of both worlds — leveraging AI’s strengths while minimizing its weaknesses — organizations should follow these strategies:

- Hybrid Security Strategy: Use AI for repetitive, high-volume tasks but keep humans in the loop for validation and decision-making.

- Continuous Model Training & Validation: Retrain AI systems frequently using real-world data and include human feedback loops.

- Explainable AI (XAI): Prioritize models that clearly justify their outcomes to maintain compliance and build trust.

- Strong Governance, Ethics & Privacy Controls: Establish clear policies for data use, monitoring, and auditing aligned with regulations.

- Skilled Human Resources: Train cybersecurity teams in AI literacy, forensics, and threat intelligence to strengthen oversight.

- Risk-Based Prioritization: Deploy AI where it provides value and rely on humans for high-impact, complex decision-making.

- Robust Incident Response: Ensure human-led contingency plans exist for AI failures or misclassifications.
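As a rough illustration of the hybrid and risk-based strategies above, the sketch below routes alerts by model confidence and asset criticality. It is one possible design under stated assumptions, not a prescribed implementation: the `Alert` fields, the `route` function, and both thresholds are hypothetical and would need tuning against an organization's own data and risk appetite.

```python
from dataclasses import dataclass

@dataclass
class Alert:
    alert_id: str
    model_score: float    # model's estimated probability of malicious activity
    asset_critical: bool  # whether the affected asset is business-critical

def route(alert, auto_close_below=0.10, auto_contain_above=0.95):
    """Decide who handles an alert: automation or a human analyst."""
    # High-impact assets always get a human decision, whatever the score.
    if alert.asset_critical:
        return "human_review"
    # Very low scores are closed automatically to reduce alert volume.
    if alert.model_score < auto_close_below:
        return "auto_close"
    # Very high scores trigger automated containment on non-critical assets.
    if alert.model_score > auto_contain_above:
        return "auto_contain"
    # Everything in the uncertain middle goes to an analyst.
    return "human_review"

alerts = [
    Alert("A-1", 0.03, False),
    Alert("A-2", 0.98, False),
    Alert("A-3", 0.98, True),
    Alert("A-4", 0.55, False),
]
for a in alerts:
    print(a.alert_id, route(a))
```

The design choice worth noting is that automation is confined to the two ends of the confidence range on low-impact assets, while ambiguous or high-impact cases stay with people. Analyst decisions on the reviewed alerts can then feed back into retraining.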

Empower Humans with AI, Not Replace Them

CyberCube’s hybrid defense framework merges AI precision with human intelligence — ensuring accountability, transparency, and resilience.

Moving Forward with a Hybrid Defence

AI in cybersecurity is here to stay. Its speed, scale, and automation are a huge asset in many aspects of defence. However, the risks of overreliance, misplaced trust, and ethical or privacy problems mean that machines by themselves cannot defend against all threats.

The organisations that succeed will be those that have developed a hybrid defence model: using AI tools to do the heavy lifting of detecting threats, while relying on humans to bring accuracy, judgement, and accountability to the response. It's not about AI versus humans, or about one side winning. It's about how they work together.

Are you seeking expert, human-driven cybersecurity services?

We specialise in bespoke, human-driven solutions that address difficult cybersecurity challenges. Our team of experts brings deep contextual insight, critical thinking, and strategic capability to help organisations deal with today’s evolving threat landscape. We are your partner in ensuring that the foundation of your defence strategy is built on human excellence.

Build a Smarter, Human-Centric Cyber Defense

Partner with CyberCube to design AI-driven security systems that enhance—not replace—human intelligence and accountability.
