Cyber attackers are no longer the faceless hackers of the past. They now use AI to impersonate your coworkers, imitate your CEO’s voice, and exploit your organization’s digital habits with unsettling precision. Welcome to the era of AI-powered phishing, where social engineering has evolved into a fast, high-tech operation.

Before we jump into what is new, let’s address the basics:

What is a phishing attack?

A phishing attack is a type of cybercrime in which attackers impersonate trustworthy entities, usually through emails, messages, or phone calls, to trick victims into handing over sensitive information such as passwords, banking details, or corporate credentials.

Why is it called phishing?

Phishing comes from “fishing,” where attackers cast a deceptive hook and wait for victims to “bite.” Hacker culture swapped the “f” for “ph,” a nod to early phone hacking known as “phreaking.”

Today, phishing has moved far beyond suspicious emails full of typos and shady URLs. In 2025, the challenge is defending against machine-generated deception that sounds, looks, and feels alarmingly real.

The Evolution of Phishing: From Spam to Smart Attacks

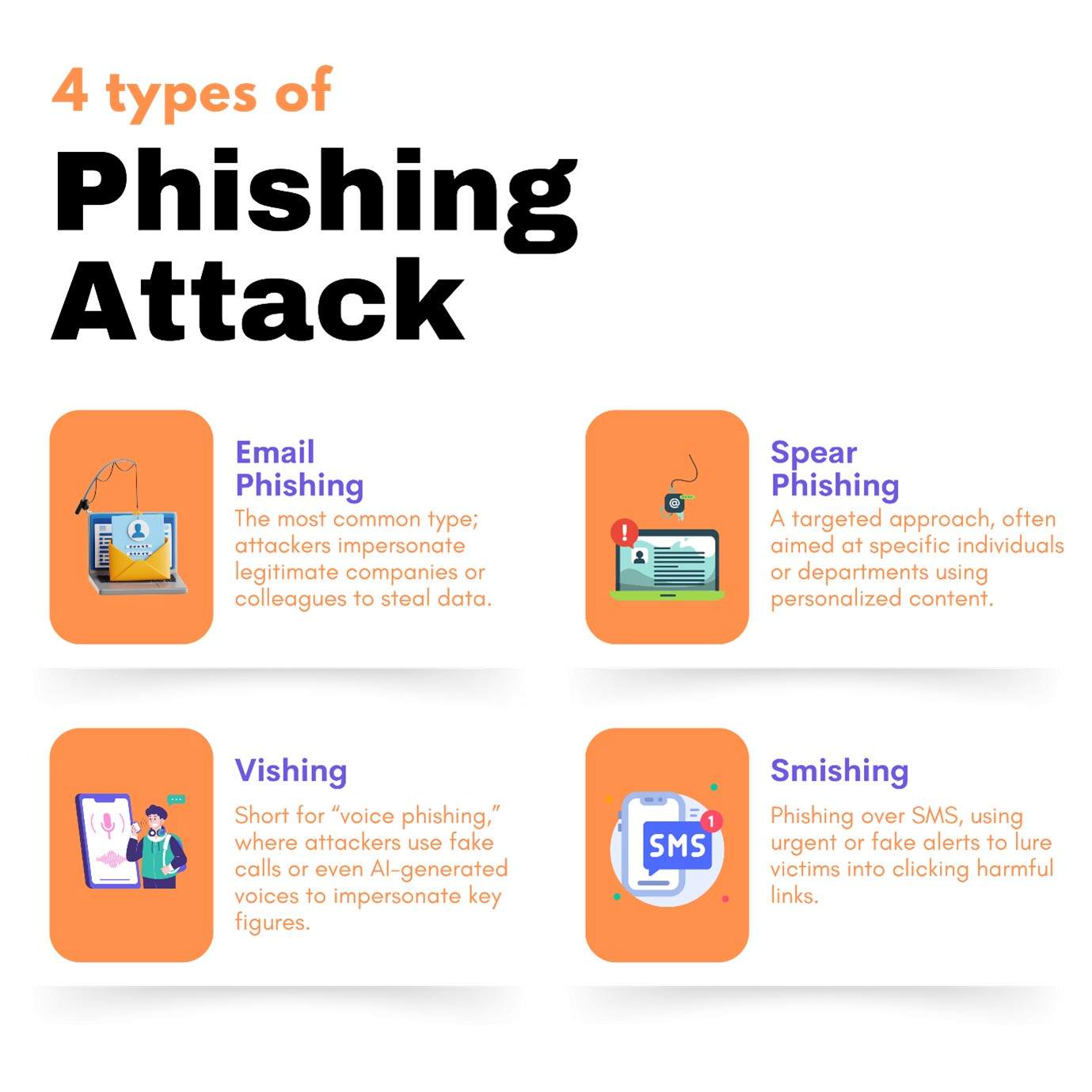

Phishing isn’t new, but it’s never been this smart. Traditional phishing emails relied on generic language and poor grammar to lure victims into clicking malicious links, and most attacks fell into a few familiar categories.

Let’s look at an example of a modern phishing attack. Imagine a finance team member receiving an email that appears to come from the CEO, referencing a real vendor and a real invoice and urging immediate payment. Everything checks out, but the email is fake. The attacker used scraped data to make it believable and generative AI to craft a flawless message.

In 2025, however, phishing has evolved far beyond these basic categories. Attackers now use generative AI tools to:

- Write grammatically perfect, context-aware emails.

- Scrape social media to personalize content.

- Generate deepfake audio and video to impersonate trusted individuals.

- Launch real-time chat attacks through AI chatbots.

This is not simply a change in tactics. It is a totally new threat environment.


How Threat Actors Are Using AI to Create Targeted Attacks

Large language models such as GPT, along with many open-source alternatives, have become invaluable tools for cybercriminals. Here’s how they are using AI in phishing attacks:

- Hyper-Personalization at Scale: Attackers pull data from LinkedIn, leaked CRM records, or even company websites to generate contextually relevant messages. The email doesn’t just use your name; it references a recent project, a known colleague, or a specific business deal.

- Voice and Video Deepfakes: Impersonating an executive has never been easier. Using text-to-speech AI and publicly available videos, attackers generate fake voice notes and video calls. Imagine receiving a voice message from your CFO asking for urgent invoice processing: it sounds real, but it’s fake.

- Adaptive AI Chatbots: These bots can hold conversations with victims. If an employee replies to a phishing email, an AI chatbot can continue the conversation in real time, adjusting its tone and language dynamically, just like a human.

- Bypassing Traditional Filters: Many email security tools are trained on known phishing templates. AI-generated content doesn’t follow a fixed pattern, which makes it harder for these tools to flag as malicious.
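To see why fixed templates struggle, here is a deliberately simplified Python sketch of a signature-style filter: it fingerprints known phishing text and flags only messages that match after basic normalization. The template and both sample emails are hypothetical, and real products use far richer signals than a single hash.

```python
import hashlib
import re

# Hypothetical "known bad" template, stored the way a simple signature filter might.
KNOWN_TEMPLATES = [
    "your account has been suspended. click here to verify your password.",
]


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial formatting tricks don't evade the match."""
    return re.sub(r"\s+", " ", text.strip().lower())


def fingerprint(text: str) -> str:
    """SHA-256 digest of the normalized text."""
    return hashlib.sha256(normalize(text).encode("utf-8")).hexdigest()


SIGNATURES = {fingerprint(t) for t in KNOWN_TEMPLATES}


def signature_filter(body: str) -> bool:
    """True only if the body matches a known template after normalization."""
    return fingerprint(body) in SIGNATURES


# A recycled template with cosmetic changes is still caught...
template_copy = "Your account has been   SUSPENDED. Click here to verify your password."
# ...but a hypothetical AI-written, personalized lure shares no fingerprint with it.
ai_rewrite = (
    "Hi Dana, following up on the Q3 vendor review: IT flagged your mailbox "
    "for re-verification. Could you confirm your login through the portal today?"
)

print(signature_filter(template_copy))
print(signature_filter(ai_rewrite))
```

Production filters add fuzzy matching, URL reputation, and machine-learning classifiers, but the weakness is similar in kind: detection anchored to previously seen content has less to grab onto when every message is freshly generated.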

Case Study: The Deepfake CEO Scam That Almost Worked

In Q1 2025, a European manufacturing company almost fell victim to an AI-powered voice phishing (vishing) scam. The CFO received a voice message via WhatsApp from someone who sounded exactly like their CEO, urgently requesting a $250,000 payment for a confidential acquisition deal.

What gave it away? A lucky phone call. The CFO decided to verify the request with the CEO directly, only to discover no such deal existed.

Post-incident analysis showed the attacker had trained a voice cloning model using public speeches and interviews of the CEO. The message was generated using an AI script and delivered within minutes of scraping the data.

This is just one of many attacks surfacing in 2025.


Defensive Measures: Tools and Frameworks to Detect AI Phishing

Defending against AI-powered phishing requires a multi-layered approach. Here’s what works in 2025:


AI vs. AI: Deploy AI-Powered Detection

Just as attackers use AI to craft phishing messages, defenders must use AI to detect anomalies in language patterns, sender behaviour, and content tone.

Tools in this space include:

- Darktrace

- Microsoft Defender AI Enhancements

- Abnormal Security
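As a rough illustration of the behavioural signals such tools weigh, the Python sketch below checks a message against three hand-written heuristics: a sender domain that closely resembles a trusted one, a reply-to address on a different domain, and stacked urgency language. The trusted-domain list, cue words, and 0.85 similarity threshold are all hypothetical; the products listed above rely on trained models rather than fixed rules like these.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher

# Hypothetical baseline: domains this organization normally corresponds with.
TRUSTED_DOMAINS = {"example.com", "vendor-partner.com"}
# Hypothetical urgency cues; a trained model would learn such patterns instead.
URGENCY_CUES = ("urgent", "immediately", "confidential", "wire", "today")


@dataclass
class Email:
    sender: str
    reply_to: str
    body: str


def domain(address: str) -> str:
    """Return the lowercased part after the last '@'."""
    return address.rsplit("@", 1)[-1].lower()


def is_lookalike(dom: str, threshold: float = 0.85) -> bool:
    """Flag domains that are very similar to, but not the same as, a trusted domain."""
    if dom in TRUSTED_DOMAINS:
        return False
    return any(
        SequenceMatcher(None, dom, trusted).ratio() >= threshold
        for trusted in TRUSTED_DOMAINS
    )


def anomaly_signals(msg: Email) -> list[str]:
    """Collect human-readable reasons this message deviates from the baseline."""
    signals = []
    if is_lookalike(domain(msg.sender)):
        signals.append("lookalike sender domain")
    if domain(msg.reply_to) != domain(msg.sender):
        signals.append("reply-to domain differs from sender")
    body = msg.body.lower()
    if sum(cue in body for cue in URGENCY_CUES) >= 2:
        signals.append("stacked urgency language")
    return signals


msg = Email(
    sender="ceo@examp1e.com",
    reply_to="ceo.private@freemail.test",
    body="Urgent: please wire the payment today and keep it confidential.",
)
print(anomaly_signals(msg))
```

Returning reasons rather than a bare score keeps the output explainable, which matters when an analyst or employee has to decide whether to trust a flagged message.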


Human Layer Security

Regular phishing awareness training is no longer enough. Modern organizations are investing in:

- Real-time phishing simulations using AI.

- Deepfake detection workshops.

- Zero-trust verification for high-value approvals.


Voice and Video Verification Protocols

Implement a verification protocol that requires face-to-face or multi-factor approval for any transaction or instruction involving money or access changes, especially when the request arrives via email, WhatsApp, or phone call.
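One way to make such a protocol enforceable is to encode it as a check that runs before a payment or access change is executed. The Python sketch below assumes hypothetical rules: any request involving money or access is blocked unless it was confirmed face to face, or, failing that, confirmed by a call-back on contact details already on file (required when it arrived by email, WhatsApp, or phone) and approved by someone other than the requester. The `Request` fields, channel names, and messages are illustrative only.

```python
from dataclasses import dataclass, field

# Hypothetical set of channels the policy treats as easy to spoof.
HIGH_RISK_CHANNELS = {"email", "whatsapp", "phone"}


@dataclass
class Request:
    requester: str                      # claimed identity of whoever sent the instruction
    channel: str                        # how the instruction arrived
    amount: float = 0.0                 # money involved, if any
    access_change: bool = False         # e.g. new admin rights or a password reset
    verified_in_person: bool = False    # confirmed face to face
    callback_confirmed: bool = False    # confirmed by calling a number already on file
    approvers: set[str] = field(default_factory=set)


def may_execute(req: Request) -> tuple[bool, str]:
    """Hypothetical gate run before any payment or access change is carried out."""
    if req.amount <= 0 and not req.access_change:
        return True, "not a money or access request"
    if req.verified_in_person:
        return True, "verified face to face"
    if req.channel in HIGH_RISK_CHANNELS and not req.callback_confirmed:
        return False, "call back on contact details already on file"
    if not (req.approvers - {req.requester}):
        return False, "needs approval from someone other than the requester"
    return True, "multi-factor approval complete"


# A WhatsApp voice note like the one in the case study, before anyone verifies it:
voice_note = Request(requester="ceo", channel="whatsapp", amount=250_000)
print(may_execute(voice_note))

# If a call-back to the CEO's known number had confirmed it, and the CFO signed off:
voice_note.callback_confirmed = True
voice_note.approvers.add("cfo")
print(may_execute(voice_note))
```

The key design choice is that the call-back uses contact details already on file, never a number or link supplied in the suspicious message itself.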


Threat Intelligence Integration

Real-time threat intelligence feeds keep you aware of active campaigns. Many AI-enabled attacks are built around timely events and themes, such as fake COVID alerts, compliance warnings, and urgent tax changes.

By integrating threat intelligence with your SIEM or SOAR platform, you can detect threats and emerging trends before they lead to a compromise.
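To make the integration idea concrete, here is a minimal Python sketch that checks an incoming email’s subject and link domains against a feed of active lure themes and produces JSON records a SIEM could ingest. The feed format, its entries, and the domain are invented for illustration (`.test` is a reserved top-level domain); production feeds typically use standard formats such as STIX, often delivered over TAXII, and forwarding would go through your SIEM’s own ingestion mechanism.

```python
import json

# Hypothetical feed excerpt listing active lure themes, keywords, and domains.
FEED_JSON = """
[
  {"theme": "tax", "keywords": ["tax refund", "irs notice"], "domains": ["tax-refund-portal.test"]},
  {"theme": "compliance", "keywords": ["policy violation", "compliance deadline"], "domains": []}
]
"""


def load_feed(raw: str) -> list[dict]:
    """Parse the hypothetical JSON feed into a list of theme entries."""
    return json.loads(raw)


def match_email(subject: str, link_domains: list[str], feed: list[dict]) -> list[dict]:
    """Return one alert record per feed entry the email matches."""
    alerts = []
    text = subject.lower()
    for entry in feed:
        hit_keywords = [kw for kw in entry["keywords"] if kw in text]
        hit_domains = [d for d in link_domains if d.lower() in entry["domains"]]
        if hit_keywords or hit_domains:
            alerts.append(
                {"theme": entry["theme"], "keywords": hit_keywords, "domains": hit_domains}
            )
    return alerts


feed = load_feed(FEED_JSON)
alerts = match_email(
    "URGENT: IRS notice about your tax refund", ["tax-refund-portal.test"], feed
)
# In practice these records would be forwarded to the SIEM; here we just print them.
print(json.dumps(alerts, indent=2))
```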

The biggest challenge? These attacks often don’t match traditional indicators of compromise (IOCs), such as known malicious domains, IP addresses, or file hashes. The focus must shift from reactive defense to predictive analytics and human-aware security programs.

Future-Proofing Your Human Layer: Awareness in the AI Age

Cybersecurity in 2025 isn’t just about firewalls or patching—it’s about people. But empowering your people requires more than policies.

Here’s how to build resilience:

- Encourage curiosity over compliance: employees should feel safe asking, “Is this real?”

- Make awareness campaigns relatable, real-time, and role-based.

- Include AI deception drills as part of annual security exercises.

Organizations that blend human intuition with AI-driven defense will lead the charge against this new wave of social engineering.

The line between real and fake is rapidly blurring. AI-powered phishing isn’t a prediction—it’s already here. And it's getting smarter, faster, and more convincing each day.

2025 is not the year to wait and see. It’s the year to adapt, respond, and lead with cyber resilience.

Get Ahead of AI-Powered Phishing

We’ll help you simulate, detect, and defend against next-generation phishing using AI-powered protection strategies.

Talk to CyberCube