Artificial intelligence is now embedded across banking, healthcare, SaaS platforms, internal automation, customer service, and even security operations.

But while boards and regulators focus on AI models, data usage, and governance, attackers focus on something far more practical:

The APIs That Power AI Systems

In 2026, most AI systems do not operate in isolation. They depend on APIs for:

- Data ingestion

- Model inference

- Decision execution

- Integrations with business systems

- User interaction

That makes APIs the control plane of AI—and also its weakest link.

Yet in many organizations, API security still sits under traditional web testing programs, treated as a secondary concern rather than a primary AI risk.

This gap is already being exploited.

Regulators Care About AI — Attackers Care About APIs

Regulatory frameworks worldwide are catching up to AI risk. Governance, model transparency, and data handling are under scrutiny.

But breaches rarely happen through the model itself. They happen through:

- Unsecured inference APIs

- Exposed data pipelines

- Broken authorization in AI-powered workflows

- Overprivileged service tokens

- Undocumented endpoints feeding models
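
One item in the list above, overprivileged service tokens, can be checked by comparing what each token was granted with what its workload actually needs. The following is a minimal sketch under stated assumptions: the service names, scope strings, and the `find_overprivileged` helper are all hypothetical and do not reflect any particular identity provider.

```python
# Hypothetical inventory: scopes each service token was granted.
GRANTED = {
    "inference-gateway": {"inference:invoke", "training-data:read", "admin:write"},
    "feedback-collector": {"feedback:write"},
}

# Hypothetical baseline: scopes each workload actually needs to do its job.
REQUIRED = {
    "inference-gateway": {"inference:invoke"},
    "feedback-collector": {"feedback:write"},
}


def find_overprivileged(granted: dict, required: dict) -> dict:
    """Return, per service, the granted scopes that exceed the required baseline."""
    findings = {}
    for service, scopes in granted.items():
        # A service with no documented baseline is treated as needing nothing,
        # so every scope it holds is reported.
        excess = scopes - required.get(service, set())
        if excess:
            findings[service] = sorted(excess)
    return findings


if __name__ == "__main__":
    for service, excess in find_overprivileged(GRANTED, REQUIRED).items():
        print(f"{service} holds unneeded scopes: {', '.join(excess)}")
```

In practice the granted scopes would come from the token issuer and the baseline from a review of what each integration calls, so the hard part is keeping both inventories current.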

From an attacker’s perspective, APIs are the easiest path to:

- Manipulate AI decisions

- Extract sensitive training or operational data

- Abuse automation at scale

- Bypass business controls without touching the UI

The more AI is integrated into core business processes, the more API misuse becomes business risk.

API Security: The Missing Control in Most AI Risk Management Programs

Most AI risk programs focus on:

- Model accuracy

- Bias

- Explainability

- Data privacy

- Governance documentation

Very few focus on how AI is actually accessed and controlled in production.

In reality, AI systems expose:

- Inference APIs

- Orchestration APIs

- Feedback loop APIs

- Partner-facing AI integrations

- Internal automation endpoints

These APIs are not just data pipes—they control decisions, actions, and outcomes.

If an API allows a user (or a service identity) to:

- Replay requests

- Manipulate parameters

- Bypass approval flows

- Escalate privileges

- Access other users’ data

Then the AI system becomes an attack amplifier, not an innovation engine.
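
Request replay, the first item in the list above, is commonly countered by requiring a one-time nonce and a timestamp on each call. The sketch below illustrates that idea only. `ReplayGuard` and its parameters are hypothetical names, it keeps state in memory for a single process, and it assumes the nonce and timestamp are already covered by a request signature that is verified elsewhere.

```python
import time
from typing import Optional


class ReplayGuard:
    """Rejects requests that are stale or that reuse an already accepted nonce."""

    def __init__(self, max_age_seconds: float = 300.0):
        self.max_age_seconds = max_age_seconds
        self._seen = {}  # nonce -> time after which the request would be stale anyway

    def accept(self, nonce: str, sent_at: float, now: Optional[float] = None) -> bool:
        now = time.time() if now is None else now
        # Forget nonces whose requests can no longer pass the freshness check.
        self._seen = {n: exp for n, exp in self._seen.items() if exp >= now}
        # Reject requests whose timestamp is outside the freshness window.
        if abs(now - sent_at) > self.max_age_seconds:
            return False
        # Reject a nonce that has already been accepted once.
        if nonce in self._seen:
            return False
        self._seen[nonce] = sent_at + self.max_age_seconds
        return True


if __name__ == "__main__":
    guard = ReplayGuard(max_age_seconds=60)
    print(guard.accept("abc", sent_at=1000.0, now=1001.0))  # first use
    print(guard.accept("abc", sent_at=1000.0, now=1002.0))  # replayed copy
```

A deployment with several API instances would need a shared store for the nonces, which this sketch does not attempt.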

Stop Treating API Security as Secondary

AI risk is operational risk. Secure the endpoints that control decisions and automation.

AI, APIs and the Expanding Attack Surface

AI has dramatically increased the number of exposed APIs inside organizations.

What used to be a single application is now:

- Multiple microservices

- AI pipelines

- Third-party model providers

- Data brokers

- Orchestration layers

- Automation bots

Each connection is an API. Each API is a potential control failure.

Traditional security programs were built for visible applications and linear workflows. AI systems, however, operate through non-linear decision trees and chained APIs—where one small authorization flaw can cascade into full compromise.

This is why so many AI-related breaches look “simple” in hindsight: the logic was never tested end-to-end.

Why Traditional Security Testing Is Failing AI Systems

Traditional vulnerability assessment and penetration testing (VAPT) was designed for a different era:

- Browser-first applications

- Visible user flows

- Predictable actions

- Isolated endpoints

AI systems break all those assumptions.

Security tools often test:

- Login

- Basic authorization

- Known endpoints

- Surface-level vulnerabilities

What they miss:

- Undocumented AI endpoints

- Service-to-service trust assumptions

- Workflow manipulation

- Decision replay attacks

- Role confusion between human and machine users

- Excessive data exposure in model responses

Automation-heavy testing also struggles here because AI risk is contextual. Understanding whether a decision can be abused requires reasoning, not just pattern matching. This is where most testing stops—and where attackers start.

Why APIs Are the Real AI Security Boundary

In 2026, APIs define:

- Who can access AI

- What data AI can see

- Which actions AI can trigger

- How decisions propagate

- Where trust boundaries exist

If an attacker controls an API call, they effectively control the AI system behind it.

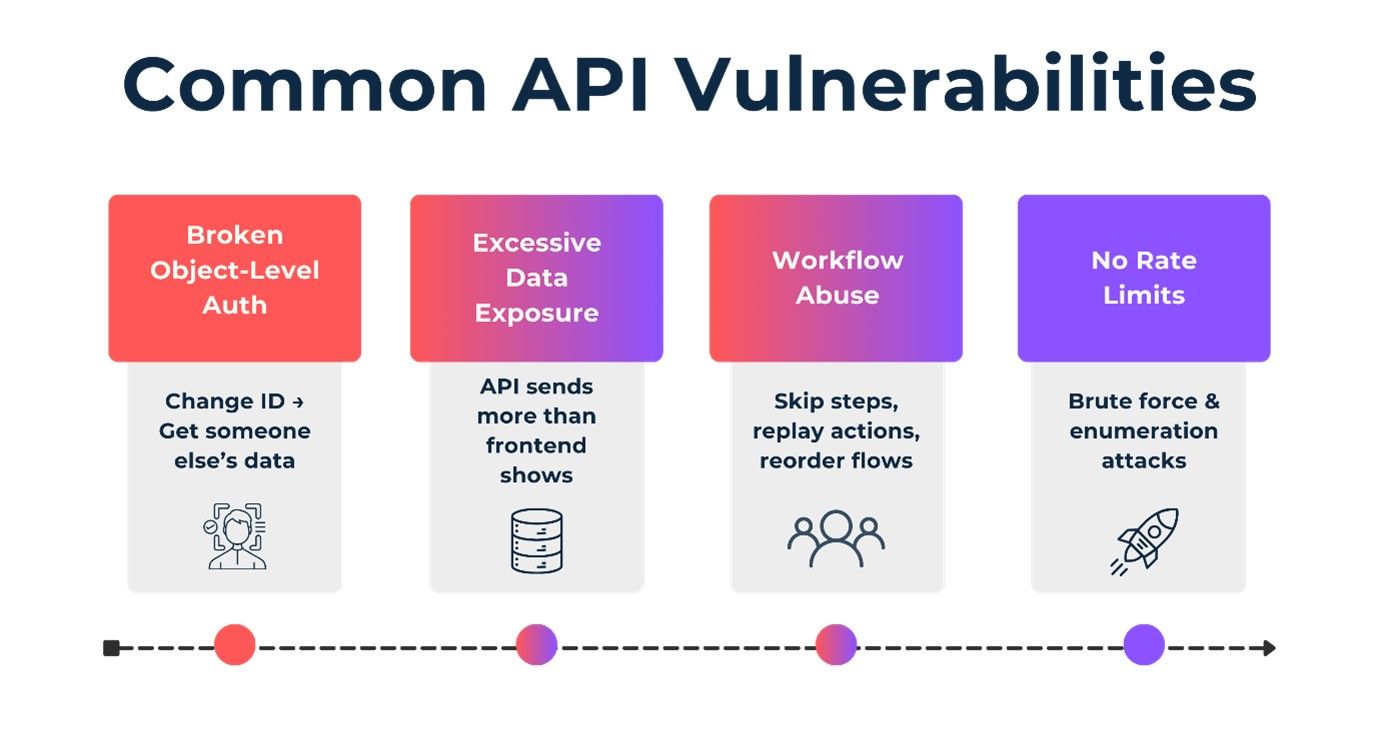

That is why modern breaches are not about exploiting models—they are about exploiting logic. Broken object-level authorization, excessive data exposure, workflow abuse, and missing rate limits remain the most common failures—now with far greater impact because AI automates them at scale.
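
Of the failures named above, missing rate limits is the simplest to illustrate. The following token bucket is a minimal sketch, not a production limiter: the `TokenBucket` class and its parameters are hypothetical, and it tracks a single caller in memory, with time passed in explicitly so the behavior is easy to follow.

```python
class TokenBucket:
    """Allows short bursts up to `capacity`, then a steady `refill_per_second` rate."""

    def __init__(self, capacity: int, refill_per_second: float, now: float = 0.0):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.tokens = float(capacity)  # start with a full bucket
        self.updated_at = now

    def allow(self, now: float) -> bool:
        # Add the tokens earned since the last call, capped at capacity.
        elapsed = max(0.0, now - self.updated_at)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.updated_at = max(self.updated_at, now)
        # Spend one token per request, or reject when the bucket is empty.
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


if __name__ == "__main__":
    bucket = TokenBucket(capacity=2, refill_per_second=1.0)
    # Three calls at the same instant: the third exceeds the burst allowance.
    print([bucket.allow(now=0.0) for _ in range(3)])
```

For an inference API, one bucket per caller identity (user, service token, or partner key) is a reasonable starting assumption, since automated callers can otherwise exhaust capacity or scrape outputs far faster than a person could.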

How APIs Are Exploited in the Real World

Most API breaches are not technically complex. They succeed because logic assumptions go untested.

One of the most common examples is broken object-level authorization. An API may allow a user to request a resource by ID, assuming the backend will only return data the user owns. If that check is missing or inconsistent, simply changing an identifier can expose another user’s data.
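
The difference between the vulnerable and the checked version can be sketched in a few lines. Everything here is illustrative: the `Record` type, the in-memory `RECORDS` store, and both handler functions are hypothetical stand-ins for a real backend.

```python
from dataclasses import dataclass


class Forbidden(Exception):
    """Raised when the caller may not access the requested object."""


@dataclass(frozen=True)
class Record:
    record_id: str
    owner_id: str
    payload: str


# Hypothetical data store keyed by record ID.
RECORDS = {
    "r1": Record("r1", owner_id="alice", payload="alice-notes"),
    "r2": Record("r2", owner_id="bob", payload="bob-notes"),
}


def get_record_vulnerable(record_id: str) -> Record:
    # Trusts the ID alone: any authenticated caller can read any record.
    return RECORDS[record_id]


def get_record(caller_id: str, record_id: str) -> Record:
    record = RECORDS.get(record_id)
    # Missing and foreign records fail the same way, so callers cannot
    # use the error to learn which IDs exist.
    if record is None or record.owner_id != caller_id:
        raise Forbidden(f"{caller_id} may not access {record_id}")
    return record


if __name__ == "__main__":
    print(get_record("alice", "r1").payload)
    try:
        get_record("alice", "r2")
    except Forbidden as exc:
        print("blocked:", exc)
```

The point of the sketch is that the ownership check lives next to the data access, on every code path that returns the object, rather than being assumed by an upstream layer.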

What CISOs Should Do Differently in 2026

Mature security teams are changing how they think about API and AI risk:

- Start with visibility: You cannot secure AI APIs you do not know exist.

- Test roles, objects, and states—not just endpoints.

- Treat business logic abuse as a primary threat model, not an edge case.

- Validate token scopes, service identities, and trust relationships continuously.

- Test how APIs behave when requests are replayed, steps are skipped, sequences are reordered, inputs are manipulated, and limits are pushed.
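
The last point, testing skipped, reordered, and replayed steps, assumes the API enforces workflow order on the server side. A minimal sketch of such enforcement follows. The states, the `ALLOWED_TRANSITIONS` table, and the `ApprovalRequest` class are hypothetical and model a generic approve-then-execute flow.

```python
class WorkflowError(Exception):
    """Raised when a request tries to move to a state that is not allowed next."""


# Hypothetical approval flow: each state lists the states that may follow it.
ALLOWED_TRANSITIONS = {
    "created": {"submitted"},
    "submitted": {"approved", "rejected"},
    "approved": {"executed"},
    "rejected": set(),
    "executed": set(),
}


class ApprovalRequest:
    def __init__(self):
        self.state = "created"

    def transition(self, new_state: str) -> None:
        # The server decides what may come next; the client cannot skip ahead.
        if new_state not in ALLOWED_TRANSITIONS.get(self.state, set()):
            raise WorkflowError(f"cannot go from {self.state} to {new_state}")
        self.state = new_state


if __name__ == "__main__":
    request = ApprovalRequest()
    try:
        request.transition("executed")  # attempts to skip approval
    except WorkflowError as exc:
        print("blocked:", exc)
    for step in ("submitted", "approved", "executed"):
        request.transition(step)
    print("final state:", request.state)
```

A test suite built on this idea would call each step out of order and a second time, expecting every such attempt to be rejected.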

Most importantly, stop treating API security as a compliance task and start treating it as core AI risk management.

From Compliance Checkbox to Business Mandate

API security in the age of AI is no longer a technical hygiene issue. It is a business continuity issue.

When AI systems:

- Approve transactions

- Grant access

- Support healthcare decisions

- Automate operations

- Trigger payments

- Recommend actions

Then API failures become executive-level incidents.

The organizations that succeed in 2026 will be those that align security testing with how systems are actually built—not how they used to be built.

AI may be the future of business, but APIs are the infrastructure of that future. If your AI risk program does not explicitly test how APIs can be abused, your most advanced systems will remain your most vulnerable ones.

In 2026, the question is no longer whether you use AI. It is whether you are securing the APIs that control it.

Frequently Asked Questions

1. What makes API security so important when it comes to protecting AI systems?

AI systems use APIs to retrieve information, make decisions, and trigger actions across many applications. If an API is not properly secured, attackers can manipulate a model's output, steal personally identifiable information, or abuse an automated workflow without ever touching the model itself. In 2026, APIs are the main security boundary for AI, so they must be properly secured before companies can use AI safely.

2. What are the main differences between API security and traditional application security?

Traditional application security focuses on the user interface and on-screen workflows, while API security focuses on backend logic, authorization, data access, and workflow integrity. Because APIs are typically invisible to users and operate across multiple systems, a flaw in an API generally has a greater impact than a flaw at the GUI level.

3. Can AI-based APIs be completely secured using automated tools?

No. Automated tools can help identify known patterns and misconfigurations, but they cannot fully understand business logic, decision-making processes, or context-dependent abuse. Properly protecting AI-based APIs requires manual testing, threat modeling, and ongoing validation of workflows, roles, and trust relationships.

4. How often should organizations test APIs that power AI systems?

APIs should be tested whenever changes are made to AI models, data pipelines, or integrations. In addition to periodic deep assessments, organizations should maintain continuous visibility into their API ecosystem to ensure new endpoints and changes do not introduce hidden risk.

Secure the APIs That Control Your AI

Get an AI-aligned API security review: endpoint discovery, workflow abuse testing, token scope validation, and a remediation plan focused on reducing real business risk.

Talk to CyberCube